- 2022-09-30

- Cybozu Labs Machine Learning Study Group

- How it works.

- I’m not talking about usage or business value.

- Objective: “Reduce black boxes.”

- At first I wrote “eliminate the black boxes,” but digging depth-first until no black box remains would not finish even in 10 hours, so this goes breadth-first.

How Stable Diffusion works

- a rough overall view

- There are three main components.

- diffusion model (Denoising Diffusion Probabilistic Models, DDPM)

- Basically, images are generated by repeatedly applying a “noise removal mechanism.”

- Prepare images x collected from the Internet and noisy versions y of them, and train a “neural network that outputs x when given y.”

- When this is applied repeatedly to pure noise, a new image is created.

- The actual intermediate values during a Stable Diffusion run were extracted and visualized.

- The far left is pure noise; after repeated noise removal, it becomes a cat.
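How a training pair can be made, as a minimal sketch. In the DDPM paper the network is actually trained to predict the added noise ε from the noisy image y rather than x directly; this carries the same information, since x can be recovered from y, ε, and the step t. The linear β schedule (1e-4 to 0.02 over 1,000 steps) is the one from the DDPM paper and is assumed here; Stable Diffusion's own schedule differs, and the 3-number “image” is purely illustrative.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t of (1 - beta_t) for a linear beta schedule."""
    alpha_bars = []
    prod = 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: y = sqrt(a_t) * x0 + sqrt(1 - a_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    y = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return y, eps  # training pair: the network sees (y, t) and learns to predict eps


alpha_bars = make_alpha_bars()
rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]  # stand-in for an image
y_early, _ = add_noise(x0, 10, alpha_bars, rng)   # still mostly image
y_late, _ = add_noise(x0, 999, alpha_bars, rng)   # almost pure noise
print(alpha_bars[10], alpha_bars[999])
```

Generation runs this in reverse: start from pure noise and repeatedly remove the predicted noise.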

- High-Resolution Image Synthesis with Latent Diffusion Models

- latent diffusion model

- What “latent” means here

- Rather than working in the pixel space of the image itself, images are encoded by a VAE into a low-dimensional space (the latent space); the diffusion model runs in that space, and the result is decoded back by the VAE.

- We’ll talk about VAE later.

- By default, Stable Diffusion works on a 4x64x64 tensor.

- At the end, the VAE decoder converts it back to a 512x512 RGB image.

- The space shrinks from roughly 790,000 dimensions (3 x 512 x 512) to about 16,000 dimensions (4 x 64 x 64), a 48x reduction, which makes learning more efficient.
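The exact counts behind those dimension figures, as a quick check:

```python
# Dimension counts for Stable Diffusion's default 512x512 setting.
pixel_dims = 3 * 512 * 512   # RGB image
latent_dims = 4 * 64 * 64    # VAE latent
print(pixel_dims, latent_dims, pixel_dims // latent_dims)  # 786432 16384 48
```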


- Denoising Diffusion Implicit Models (DDIM)

- The denoising process becomes 10-100 times faster.

- Previously, models denoised one step at a time.

- With the implicit method, several steps are done at once.

- The idea: if a process Y can estimate “the result of doing process X 20 times” with acceptable accuracy, do Y once instead of doing X 20 times.

- In fact, with the standard Stable Diffusion settings, the 1,000 noise-removal steps are done as 50 steps that each cover 20.
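A sketch of the 50-step schedule and of one deterministic DDIM update (the η = 0 case from the DDIM paper). It assumes `eps_pred` is the noise predicted by the U-Net and that `a_t` / `a_prev` are the cumulative ᾱ values at the current and the previous scheduled timestep; libraries such as diffusers also apply small offsets to the timesteps, which are ignored here.

```python
import math


def ddim_timesteps(num_train_steps=1000, num_inference_steps=50):
    """Every 20th training timestep, from noisiest to cleanest: 980, 960, ..., 0."""
    stride = num_train_steps // num_inference_steps
    return list(range(0, num_train_steps, stride))[::-1]


def ddim_step(x_t, eps_pred, a_t, a_prev):
    """One deterministic DDIM update (eta = 0) from timestep t to the previous scheduled one."""
    # Estimate the clean sample implied by the predicted noise.
    x0_pred = [(x - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for x, e in zip(x_t, eps_pred)]
    # Re-noise that estimate to the lower noise level of the earlier timestep, in one jump.
    return [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e
            for x0, e in zip(x0_pred, eps_pred)]


steps = ddim_timesteps()
print(len(steps), steps[:3], steps[-1])  # 50 [980, 960, 940] 0
```

If the noise prediction were perfect, one such jump would land exactly where 20 small steps would; in practice the prediction is an estimate, which is where the accumulated error comes from.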

Questions so far

- Q: What does Stable mean?

- A: It’s just a name, the project name, like asking what “Dream” means in DreamBooth.

- Q: So it doesn’t mean that it removes noise or stabilizes anything?

- A: I’m not the author, so I don’t know why this name was chosen.

- B: Maybe it was named for the impression that it produces stable, beautiful pictures.

- Q: What is a prompt?

- A: The text string you enter; explained in detail below.

- The world knows it as a tool where text goes in and a picture comes out, but the explanation so far hasn’t said where the text goes in.

- Q: So the denoising process itself has nothing to do with the prompt?

- A: Not exactly unrelated, but…

- A diffusion model generates an image by denoising, starting from pure noise.

- The denoising step is a conditional probability, so its behavior can be changed by specifying conditions, and the prompt string is inserted there as a condition.

- Note: the condition does not have to be text; in fact, the paper experiments with various types of conditions.

- The buzz about “just put in a text prompt and you get a picture!” is just a catchphrase for people who don’t know the underlying pipeline.


- Q: Does the 4 x 64 x 64 tensor mean there are 4 separate sheets?

- A: Yes, four 64x64 sheets; you can think of it as having four channels.

- Q: What is 3 x 512 x 512?

- A: The 3 RGB channels, each 512 x 512 pixels.

- Q: Why not do all 1,000 steps at once, instead of doing 20 steps at a time, 50 times?

- The more steps you combine, the larger the discrepancy from doing them properly one step at a time.

- Mathematically, you can write an update formula that does 1,000 steps at once, but it is an estimate, so the error would be large.

- If the error becomes too large, there is no practical benefit.

- As a trade-off between time and accuracy, combining about 20 steps at a time is about right in practice.

Prompt

- The text entered when generating the image.

- It is split into subword tokens, and each token (often one word) becomes a 768-dimensional vector.

- A word not in the vocabulary, such as bozuman, is split into three tokens.

- If the prompt splits into more than 77 tokens, it is truncated to that fixed length.

- If it is shorter, it is padded up to 77 tokens.

- The result is a 77 (tokens) x 768 tensor.

- Stable Diffusion’s prompt as a 77 x 768 tensor

- The vertical stripes appear because a variant of positional encoding is added.
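A minimal sketch of the fixed-length step and the embedding lookup, using a hypothetical 10-token vocabulary and 4-dimensional vectors instead of 768. Which ID is used for padding depends on the tokenizer, so `pad_id` is a parameter here. In the real pipeline, CLIP’s text encoder then runs its transformer layers over these vectors, and that final 77 x 768 output is what Stable Diffusion uses as the condition.

```python
import random

MAX_LEN = 77  # fixed prompt length in Stable Diffusion
DIM = 4       # 768 in Stable Diffusion; tiny here for readability


def pad_or_truncate(token_ids, pad_id, max_len=MAX_LEN):
    """Force a token-id sequence to exactly max_len entries."""
    if len(token_ids) >= max_len:
        return token_ids[:max_len]
    return token_ids + [pad_id] * (max_len - len(token_ids))


def embed(token_ids, token_table, position_table):
    """Look up each token's vector and add the positional vector for its slot."""
    return [[t + p for t, p in zip(token_table[tok], position_table[i])]
            for i, tok in enumerate(token_ids)]


rng = random.Random(0)
token_table = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(10)]  # hypothetical vocabulary
position_table = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(MAX_LEN)]

ids = pad_or_truncate([5, 2, 7], pad_id=9)  # hypothetical token ids
x = embed(ids, token_table, position_table)
print(len(x), len(x[0]))  # 77 4
```

The position-dependent term added to every row is the kind of thing that shows up as stripes running along the token axis in a visualization.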

- This is done by CLIP.

- It is not trained as part of Stable Diffusion.

- An existing published model is simply used as a component without modification.

- In a nutshell, CLIP is trained on matching images with sentences (guessing which image and sentence form a pair).

- Something like: given 5 images and 5 texts, which ones are pairs?

- Both images and text are projected to 768-dimensional vectors, so their cosine similarity can be computed.

- Because it was trained at a large scale and the model is publicly available, various projects use parts of it.

- Stable Diffusion uses it only to embed prompts.
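The pairing question can be sketched as a cosine-similarity matrix between image vectors and text vectors, picking the most similar text for each image. The 3-dimensional vectors below are made up for illustration (CLIP’s are 768-dimensional); during training, CLIP pushes up the similarities of the true pairs relative to all the other combinations.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cosine_matrix(image_vecs, text_vecs):
    """sim[i][j] = cosine similarity between image i and text j."""
    imgs = [normalize(v) for v in image_vecs]
    txts = [normalize(v) for v in text_vecs]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def best_text_for_each_image(sim):
    """Index of the most similar text for every image (row-wise argmax)."""
    return [max(range(len(row)), key=row.__getitem__) for row in sim]


image_vecs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
text_vecs = [[0.0, 0.9, 0.1], [0.8, 0.1, 0.0], [0.1, 0.0, 1.0]]
sim = cosine_matrix(image_vecs, text_vecs)
print(best_text_for_each_image(sim))  # [1, 0, 2]
```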


- During denoising, the prompt information enters through the attention mechanism.

- What shapes are involved in the attention mechanism, concretely?

- Concretely, the denoiser is a U-Net, and attention branches off from each of its layers, which makes the whole thing hard to explain at once, so I will extract one part and explain it.

- The prompt has 77 vectors of 768 dimensions.

- Each is converted to 300 dimensions by a suitable neural network.

- These give the set of keys K and the set of values V (each through its own projection).

- Information taken from elsewhere (the image being denoised) is also converted to 300 dimensions; this is the query Q.

- Multiply Q by K (transposed). In essence, this takes the inner product of each pair of vectors.

- Passing that through softmax makes the values larger where a query and a key point in similar directions.

- These are the attention weights.

- If one entry were 1 (and the rest 0), multiplying by V would effectively mean “pick that one.”

- In other words, these weights encode “which of the 77 tokens to pay attention to and which to ignore.”

- The output is created this way, mixing only the values that receive attention.

- The mechanism itself is the ordinary “attention mechanism” and does nothing special or specific to Stable Diffusion.
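The Q/K/V flow above, sketched as plain scaled dot-product attention on nested lists. Sizes are shrunk (8 dimensions instead of 300, 2 image positions), random vectors stand in for the projected prompt and image features, and the division by √d is the usual scaling from the standard attention formulation, which the description above doesn’t mention.

```python
import math
import random


def matmul(a, b):
    """(n x k) times (k x m) for nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(r) for r in zip(*m)]


def softmax(row):
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(q, k, v):
    """Each query (image position) mixes the values of the tokens it attends to."""
    d = len(q[0])
    scores = matmul(q, transpose(k))  # (n_queries, n_tokens): inner products
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v), weights


rng = random.Random(0)
n_tokens, d = 77, 8
k = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n_tokens)]  # from the prompt
v = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n_tokens)]  # from the prompt
q = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(2)]         # from the image side
out, weights = cross_attention(q, k, v)
print(len(out), len(out[0]), [round(sum(w), 6) for w in weights])
```

When one key matches a query far better than the rest, its weight approaches 1 and the output is essentially that token’s value, the “pick that one” case.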

- U-Net model for Denoising Diffusion Probabilistic Models (DDPM)

- https://nn.labml.ai/diffusion/ddpm/unet.html

- Simply put, U-Net is a family of networks used for tasks that take an image as input and output an image.

- It passes the data once through a low-resolution representation with many channels.

- The information from before it passes through that narrow space is copied and pasted over (concatenated).

- The gray arrows and white squares in the figure

- High-resolution output is reconstructed using both the information that passed through the low-resolution space and the information that did not.

- My understanding is that information from the attention mechanism is also added where these are pasted together, but I could be wrong.

- In the figure above, the 572 x 572 input (about 330,000 dimensions) at one point becomes a small 28 x 28 image.

- But it has 1024 channels, so the dimensionality actually increases to about 800,000.

- Highly abstract information gathered from wide regions of the image is presumably packed into these many channels.

- Passing high-dimensional information through a lower-dimensional space is an idea related to autoencoders and the like: “trivial information” not needed for recognition and similar tasks gets discarded.

- Here, however, there is no dimensionality reduction.

- Instead, it reduces spatial resolution, discarding trivial high-frequency components and the like.

- Since the dimensionality in the middle is not reduced, if it were trained on an autoencoder-like task of restoring the original image, it could restore it perfectly.

- The network in the figure is illustrated with a segmentation task as the example.

- For that kind of task, I suspect the many channels are used effectively, because segmentation cannot be solved by looking at each pixel in isolation.

- Segments are physically localized.

- Segments are not scattered across the image like speckles.

- = High-frequency, pixel-level components matter less.

- To get results that fit these patterns of human cognition, it lowers the resolution and discards high spatial-frequency components.


guidance_scale

- I saw this in the parameter list and wondered what it was; I suspect many people did.

- “guidance_scale is defined analog to the guidance weight w of equation (2) of the Imagen paper: https://arxiv.org/pdf/2205.11487.pdf”

- Compute the noise prediction conditioned on the prompt and the unconditional noise prediction with no prompt.

- The difference between them is multiplied by this factor and added to the unconditional prediction; i.e., this parameter determines how much weight the prompt gets.

- From the form of the formula I expected it to be between 0 and 1, but the default value is 7.5.

- So the prompt’s effect is applied more strongly than the model’s actual estimate (the difference is extrapolated).

- In NovelAI, the unconditional noise prediction term is replaced by the noise prediction conditioned on a negative prompt.

- This makes the negative prompt pull in the direction opposite to the normal prompt.
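The combination described above, as a sketch on plain lists. `eps_uncond` and `eps_cond` are illustrative names for the U-Net’s two noise predictions. A scale of 1 returns the conditional prediction, a scale of 0 the unconditional one, and values above 1 extrapolate past the conditional prediction. For the negative-prompt variant, the prediction for the negative prompt is passed in place of `eps_uncond`.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale=7.5):
    """uncond + scale * (cond - uncond), element-wise."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


eps_uncond = [0.0, 1.0]  # prediction with an empty prompt (or with the negative prompt)
eps_cond = [0.2, 0.8]    # prediction with the prompt
print(classifier_free_guidance(eps_uncond, eps_cond))       # pushed well past eps_cond
print(classifier_free_guidance(eps_uncond, eps_cond, 1.0))  # eps_cond, up to rounding
```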

- There is an article I wrote explaining this for Mitou Junior participants, so at this point we moved to that page for the explanation.

- The world sees it as a system that generates an image from a prompt (text), but the prompt is immediately embedded in a vector space, so it can be added and multiplied by scalars.
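Because the embedded prompt is just an array of numbers (77 x 768 in Stable Diffusion), it can be blended like any vectors. A minimal sketch of linear interpolation and scaling; `emb_a` and `emb_b` are tiny made-up stand-ins for, say, the encoded “cat” and “kitten” prompts.

```python
def lerp(emb_a, emb_b, t):
    """(1 - t) * emb_a + t * emb_b, row by row (one row per token)."""
    return [[(1.0 - t) * x + t * y for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(emb_a, emb_b)]


def scale(emb, s):
    """Scalar multiple of an embedding."""
    return [[s * x for x in row] for row in emb]


emb_a = [[0.0, 2.0], [4.0, 0.0]]  # stand-in for a 77 x 768 prompt embedding
emb_b = [[2.0, 2.0], [0.0, 4.0]]
for t in (0.0, 0.5, 1.0):
    print(t, lerp(emb_a, emb_b, t))
```

Each interpolated array can be fed to the denoiser in place of an ordinary encoded prompt.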

How img2img works

- In text-to-image, covered above, the initial value was just random noise.

- In img2img, an image given by a human is encoded into the latent space, and some noise is added to it to make the initial value.

- For example, noise corresponding to 75% of the full noise schedule is added to the original image, and then 75% of the denoising steps are run.

- Both the amount of noise and the number of denoising steps are determined by the strength parameter.

- Less noise keeps it closer to the original image.

- More noise gives a bigger change.
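How a single `strength` value can set both the noise level and the number of steps, as a sketch assuming 50 scheduled steps. As far as I understand it, this mirrors the approach in diffusers’ img2img pipeline (skip the first part of the schedule and start from the matching noise level), but the function name here is hypothetical and library details vary between versions.

```python
def img2img_schedule(num_inference_steps, strength):
    """Return (denoising steps actually run, index of the first scheduled step used).

    Starting partway down the schedule means the input image is noised to that
    step's level, and only the remaining steps are run.
    """
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    start_index = num_inference_steps - steps_run
    return steps_run, start_index


for strength in (0.0, 0.75, 1.0):
    print(strength, img2img_schedule(50, strength))
```

With strength 0.75, the image is noised to the level of step 13 of 50 and the last 37 steps are run; strength 1.0 starts from full noise, which is why the original is largely ignored at high strength.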

- The parameters for the images in this explanation are as follows:

- prompt: “cat, sea, wave, palm tree, in Edvard Munch style”

- strength: 0.75

- Stable Diffusion Latent Space Visualization


- I tried different values of strength.

- Strength was increased from 0.6 to 0.99 in 0.01 increments.

- “step: 0.1” in the image is a typo for “step: 0.01.”

- The more noise, the more freely it draws, ignoring the original picture.

- The top left is much closer to what I drew.

- Toward the bottom right, the cat goes missing, a palm tree turns into a cat, a cat gets buried in sand; it does as it pleases.

- Q: What prompts were used?

- A: All of them share the prompt: “cat, sea, wave, palm tree, in Edvard Munch style.”

- Edvard Munch is the Munch of The Scream.

- The way the sky is painted gives it a Munch look.

- In the input image I gave, the sky was painted flat in a single color; this is reproduced in the upper left, but toward the lower right the sky’s painting becomes more complex.


- One thing I got wrong in this experiment was not fixing the random seed for the added noise.

- That’s the reason for the wide variation in results.

- It would have been better to fix the seed so that this factor could be ruled out.
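The fix is to draw the noise from a fixed seed so that every strength value starts from identical noise. A stdlib sketch of that idea; in diffusers, pipelines accept a `generator` argument (for example a `torch.Generator` seeded with `manual_seed`) that serves the same purpose.

```python
import random

LATENT_SIZE = 4 * 64 * 64  # flattened 4 x 64 x 64 latent


def make_noise(seed, size=LATENT_SIZE):
    """Gaussian noise that is fully determined by the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


reference = make_noise(seed=1234)
for strength in (0.6, 0.8, 0.99):
    noise = make_noise(seed=1234)  # same seed for every strength value
    # Only `strength` changes between runs now, so differences come from it alone.
    print(strength, noise == reference)
```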

- Since the lower right corner is 99% noise, I’m rather surprised that the composition, with sand in the foreground and sea in the background, is maintained.

- Close-up of the cat in the 0.6 image

- I drew it roughly, and the ears were shaped like “is that a cat?”, but they’ve been corrected to a decent ear shape, which is nice.

- An experiment with hand-drawn diagrams

- It would be nice if it could neatly clean up hand-drawn diagrams,

- but so far I haven’t found a good prompt for that.

- I redrew it with 90% noise, but it’s hard to tell the difference.

- This part came out clean, but (lol)

- the difference is too subtle for most people to notice.

- Conversely, letters and other characters get garbled into other characters (5 becomes S, etc.).

- It does produce images in a hand-drawn style.

- I’d like to get diagrams finished with exact straight lines and arcs, like vector path drawings.

- I hope to find a prompt that produces something like that…

- Another option is to have the model learn that kind of style through fine-tuning.

inpaint

- How Stable Diffusion Inpaint works

- It’s not “the ability to redraw only the unmasked areas.”

- That’s a crude explanation for the general public.

- It is not “leave the masked part unchanged and generate the rest so that the boundaries match.”

- I assumed that at first too, but I saw behavior that contradicted that understanding, and looking into it more closely showed it wasn’t so.

- Why isn’t the masked area preserved? (9/12/2022)

- The God figure is masked. The top and bottom margins are unmasked.

- I expected God to stay exactly as in the original painting and the margins to be filled in appropriately, but that didn’t happen.

- Q: Surprising that the masked part changes.

- A: Right, the God part got heavily rewritten, and I worried, “Is something wrong with how I’m using it, or with the code?”


- It’s natural when you know how it works.

- Because it passes through the latent space along the way, there isn’t enough information to preserve the original image.

- In the bench-and-dog sample, the masked area appears preserved at first glance, but zooming in shows that the mesh pattern of the masked bench has changed.

- It just so happens that humans don’t pay attention to the pattern on the bench, so it’s hard to notice.

- Human attention is directed to the dog and cat in the center.

- A realistic workflow is to composite again after generation.

- Example of compositing in Photoshop

- Would the borders stand out if the mask image itself were used for compositing?

- Tried it: applied the mask again after inpainting.

- There are seams, but the two sides already end up close to each other near the border, so they weren’t very noticeable in this case.

- This process can be fully automated.

- Whether this treatment is preferable depends on the individual use case.

- It doesn’t cost much, and realistically, it’s better to generate both.
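The “mask again after inpaint” step is a per-pixel blend. A sketch on flattened pixel lists, assuming white (1.0) marks the area the model may redraw and black (0.0) the area to keep from the original; in-between mask values give a soft border.

```python
def composite(original, generated, mask):
    """Per-pixel blend: mask 1.0 takes the generated pixel, 0.0 keeps the original."""
    return [m * g + (1.0 - m) * o for o, g, m in zip(original, generated, mask)]


original = [0.0, 0.0, 0.0, 0.0]
generated = [1.0, 1.0, 1.0, 1.0]
mask = [0.0, 0.0, 0.5, 1.0]  # keep, keep, soft border, redraw
print(composite(original, generated, mask))  # [0.0, 0.0, 0.5, 1.0]
```

Because this runs on the final 512x512 pixels rather than in latent space, the kept area matches the original exactly; only the border can show a seam.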

- I haven’t yet explained in detail how it works.

- The white areas of the mask image mean “may be freely drawn.”

- The black areas mean “feel free to draw whatever you want, but I’ll blend it with the original image using an appropriate coefficient.”

- This blending with the original image happens many times during the denoising process.

- So in the end the black areas are “fairly close to the original image.”

- Of course, this is done in latent space at 64x64 resolution, so it is only “close in latent space.”

- A 64x64 latent restored to 512x512 by the VAE will not exactly match the original image.

- In the cat-and-God example, this “restored image” was not of acceptable quality to a human.

- Because, unlike the bench’s fence pattern, God was “in the foreground” and “the focus of human attention.”
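A sketch of the blend-during-denoising loop described above, under stated assumptions: `denoise_step` is a hypothetical stand-in for one U-Net plus scheduler step, the ᾱ schedule is a made-up linear one, and everything lives in the 64x64 latent, so “kept” areas are kept only in latent space. Some dedicated inpainting checkpoints work differently, feeding the mask and masked image to the U-Net as extra inputs.

```python
import math
import random


def blended_inpaint(init_latent, mask, timesteps, alpha_bars, denoise_step, seed=0):
    """Denoise freely, but after every step pull the mask-0 (black) area back toward
    the original latent, noised to the level of the next timestep."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in init_latent]

    def noised_original(t):
        a = alpha_bars[t]
        return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(init_latent, noise)]

    latent = noised_original(timesteps[0])
    for i, t in enumerate(timesteps):
        latent = denoise_step(latent, t)
        # After the final step the target is the clean original latent itself.
        target = noised_original(timesteps[i + 1]) if i + 1 < len(timesteps) else init_latent
        latent = [m * z + (1.0 - m) * o for z, o, m in zip(latent, target, mask)]
    return latent


def identity_step(latent, t):
    """Hypothetical placeholder for a real denoising step."""
    return latent


alpha_bars = [1.0 - 0.000999 * t for t in range(1000)]  # made-up schedule
timesteps = list(range(980, -1, -20))
init_latent = [0.3, -0.7, 1.2]
kept = blended_inpaint(init_latent, [0.0, 0.0, 0.0], timesteps, alpha_bars, identity_step)
print(kept == init_latent)  # True: a fully black mask returns the original latent
```

Even when the latent comes back exactly, the VAE decode to 512x512 is lossy, which is why detailed foreground content can still change.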

How img2prompt works

- methexis-inc/img2prompt – Run with an API on Replicate

- The name “CLIP Interrogator” seems to be catching on.

- The Stable Diffusion txt2img we’ve been talking about is a mechanism for creating images from text.

- Separately from Stable Diffusion, there is the task of taking an image as input and describing it.

- There is a project and repository that tweaks this a bit to “make a prompt that works well when fed to Stable Diffusion.”

- In this example, the result is a black cat with yellow eyes, colored pencil drawing, apparently drawn by some artist.

- Then come lots of small attributes, like “chalk drawing,” “charcoal drawing,” or “charcoal style,” and even a mysterious “CC-BY” license.

- First, BLIP generates a description of the image.

- “a painting of a black cat with yellow eyes”

- How it works

- Divide the image into regions, describe each part, then put the descriptions together.

- Taken as a whole it’s a black cat; zoomed in, it has yellow eyes; a description is generated from this.

- Other examples include “cat on a window sill” and “cats in a row.”

- So even though I only specified “black cat,” the description is more detailed than what I specified.

- The yellow eyes came from the random seed.

- After that, it does a brute-force search for elements to add.

- For example, the list of artist names has 5,219 names.

- Edvard Munch, Wassily Kandinsky, and many more than I can name.

- From these, it does a brute-force search.

- It appends each candidate and adopts the one that increases the similarity the most.

- A chance to discover unfamiliar painters and style keywords.

- Examples: Discover the painter , [/c3cats/space cat](https://scrapbox.io/c3cats/space cat).

- The cosine similarity is the one described in the CLIP section.


- The system uses cosine similarity to check how close the prompt is to the target image, and finds and combines keywords that bring it closer.
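A greedy version of that search, as a sketch. `encode_text` stands for CLIP’s text encoder and `image_vec` for the target image’s CLIP embedding; both are replaced here by a made-up keyword-count encoder so the example runs on its own, and the candidate list is tiny instead of thousands of names.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def greedy_prompt_search(base_prompt, candidates, image_vec, encode_text, max_additions=3):
    """Repeatedly append the candidate that raises similarity to the image the most."""
    prompt = base_prompt
    best = cosine(encode_text(prompt), image_vec)
    candidates = list(candidates)
    for _ in range(max_additions):
        if not candidates:
            break
        score, choice = max((cosine(encode_text(f"{prompt}, {c}"), image_vec), c) for c in candidates)
        if score <= best:
            break  # nothing improves the similarity any more
        prompt, best = f"{prompt}, {choice}", score
        candidates.remove(choice)
    return prompt, best


VOCAB = ["cat", "black", "pencil", "munch"]  # toy "embedding" axes


def fake_encode(text):
    """Made-up stand-in for a text encoder: counts a few keywords."""
    words = text.lower().replace(",", " ").split()
    return [float(words.count(w)) for w in VOCAB]


image_vec = [1.0, 1.0, 1.0, 0.0]  # pretend image embedding: a black cat in pencil
print(greedy_prompt_search("black cat", ["Edvard Munch", "colored pencil"], image_vec, fake_encode))
```

Note that this only maximizes similarity in the embedding space; nothing in it checks what an image generator would produce from the resulting prompt.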

- Note: this 768-dimensional space is different from the token embedding space; the dimensions just happen to coincide.

- It is one stage further along: the space where “text and images are embedded into the same 768 dimensions.”


- The BLIP description alone gives a cosine similarity of about 0.2.

- Picturing 2-dimensional vectors, you might think “they’re not similar at all,” but this cosine similarity is computed in a 768-dimensional space.

- In 768 dimensions, two random directions reach a similarity of 0.2 with a probability of less than one in a million.

- With the additional search, it reaches 0.22 to 0.24.
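A rough check of the “less than one in a million” claim, assuming two independent, uniformly random directions. In 2 dimensions the angle between them is uniform, so the probability is exact; in high dimensions the cosine is approximately normal with mean 0 and variance 1/d (a standard approximation), which gives a tail estimate.

```python
import math


def p_cos_at_least_2d(threshold):
    """Exact in 2D: the angle between random directions is uniform on [0, pi]."""
    return math.acos(threshold) / math.pi


def p_cos_at_least_highdim(threshold, dim):
    """Normal approximation: cos ~ N(0, 1/dim) for large dim."""
    z = threshold * math.sqrt(dim)
    return 0.5 * math.erfc(z / math.sqrt(2.0))


print(p_cos_at_least_2d(0.2))            # about 0.44: unremarkable in 2D
print(p_cos_at_least_highdim(0.2, 768))  # on the order of 1e-8
```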

- Important point

- The search increases similarity within CLIP’s space of 768-dimensional vectors.

- It doesn’t increase the “likelihood of that picture being output when you put that prompt in.”

- That’s a totally different thing, closer to Textual Inversion, which I’ll explain next.

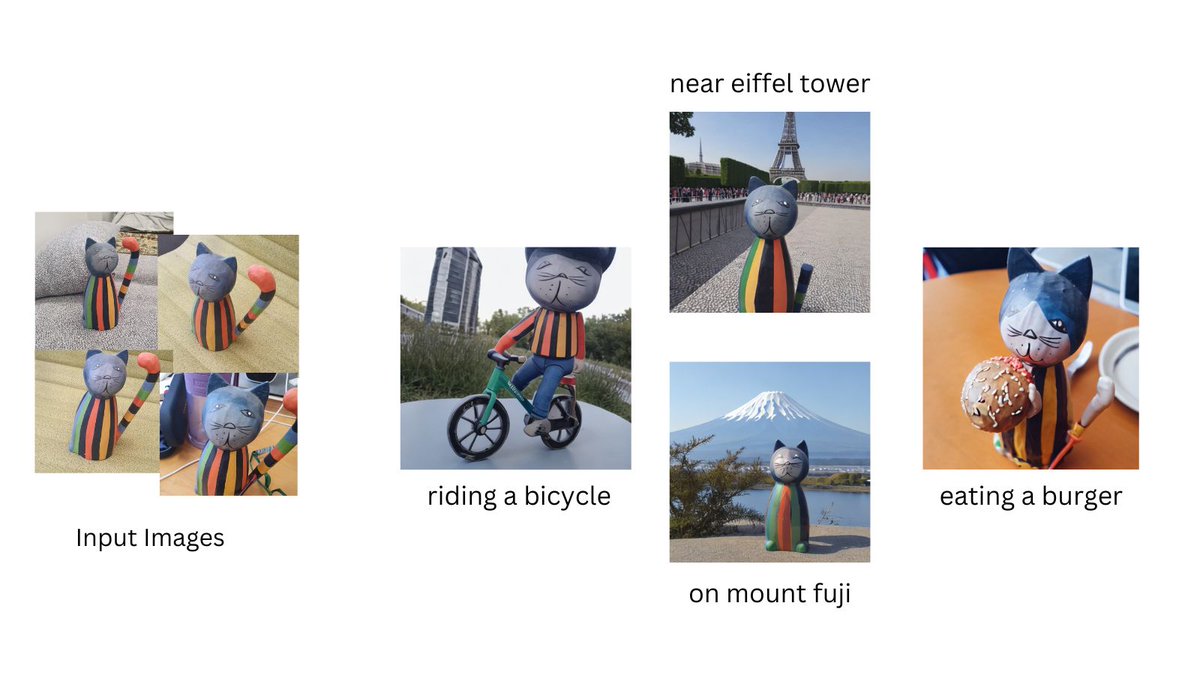

Textual Inversion

- Try Textual Inversion


- You feed it about 5 images A, wait about an hour, and it produces a 768-dimensional vector B.

- This vector is in the same space as the prompt token embeddings.

- The space where I was interpolating between cat and kitten and so on.

- Assign it to a token that is not normally used, such as *, so that it can be used in subsequent prompts.

- I haven’t delved into the details of the learning method, but in a nutshell, it is “learning to increase the probability that an image with features similar to A is generated when a prompt consisting of the single word embedded as B is used.”

- Initially, I was hoping that the cat’s coat pattern and Bozuman’s design would be reproduced, but I guess I was expecting too much.

- In the first place, we only get one vector of 768 dimensions.

- It’s absurd to use a single word-equivalent vector to describe something that has no “compact vocabulary to describe it,” like Bozuman’s pattern.

- It’s useful when humanity makes distinctions in language but the user can’t express themselves in those terms.

- For example, a user who can only express “a cat like this” with the vague concept “cat.”

- An anthropomorphized description of the AI (a metaphor):

- AI: “This is an ORANGE CAT.”

- AI: “but not tabby”

- AI: “bicolor-like, but in different colors”

- AI: “orange bicolor!”

- AI: “This is different, it’s GREEN EYES, not YELLOW EYES.”

- I described it as verbal reasoning because it is anthropomorphized, but in reality no such verbal reasoning happens; the vector is adjusted while checking whether the result is “close” or not.

- Because it goes through a “one-word meaning space” of only 768 dimensions, it cannot learn an image-like memory such as the Cybozu logo.

- Comparing the vocabulary for cat coat patterns with the vocabulary for patterns of Bozuman-like objects, the former is far richer.

- So the former is easier to do.
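A toy analogue of that optimization, not the real training objective: a frozen linear “model” `W` stands in for the diffusion model, and only one vector `v` is trained by gradient descent so that the model’s output matches the features of the example images on average. It illustrates why one vector has limited capacity: the model never changes, only `v` does, and a single vector can capture what the examples share but not the differences between them, so the loss stays above zero.

```python
import random


def frozen_model(W, v):
    """Stand-in for the frozen network: a fixed linear map of the embedding."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def loss_and_grad(W, v, targets):
    """Mean squared distance from the model output to each target, and its gradient in v."""
    out = frozen_model(W, v)
    n = len(targets)
    loss = sum(sum((o - y) ** 2 for o, y in zip(out, t)) for t in targets) / n
    mean_t = [sum(t[j] for t in targets) / n for j in range(len(out))]
    resid = [o - m for o, m in zip(out, mean_t)]
    grad = [2.0 * sum(W[j][k] * resid[j] for j in range(len(W))) for k in range(len(v))]
    return loss, grad


def train_embedding(W, targets, dim, steps=500, lr=0.05, seed=0):
    """Only v is updated; W is never touched."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 0.1) for _ in range(dim)]
    for _ in range(steps):
        _, g = loss_and_grad(W, v, targets)
        v = [x - lr * gx for x, gx in zip(v, g)]
    return v


W = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]  # frozen
targets = [[1.0, 0.5, 0.0], [0.8, 0.7, 0.2]]  # made-up features of two example images
v0 = [0.0] * 4
v = train_embedding(W, targets, dim=4)
print(loss_and_grad(W, v0, targets)[0], loss_and_grad(W, v, targets)[0])
```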


- Yesterday DreamBooth was released in an easy-to-use format.

- @psuraj28: “dreambooth stablediffusion training is now available in 🧨diffusers! And guess what! You can run this on a 16GB colab in less than 15 mins!”

- 16GB of VRAM, 15 minutes for fine tuning.

- Is the principle different from Textual Inversion, so that the facial design is preserved?

- Something I’d like to try in the future

- Q: In “Stable Diffusion Embedded Tensor Editing,” when you add word vectors together, is it because the point where they are injected is deep in the process that the output doesn’t change continuously?

- A: I think the abrupt change comes from branching near the beginning of the process of going from noise to image.

- It’s hard to put the mental image into words.

- Basically, the prompt vectors are almost the same, so for the same input, the outputs should also be almost the same.

- However, once it crosses a watershed, the difference widens, so the inputs to the next step are further apart, so the difference widens even more… and so on.

- Q: I thought the picture would change a lot at points where the attention pattern changes a lot.

- A: As far as this experiment is concerned, the form of the prompt is identical and only the vector of the one word “cat” is changed smoothly, so I don’t think abruptly changing attention is what shows up in this behavior.

- I can’t say for sure because we don’t have a visualization of attention yet.

- (Tip: note that in Stable Diffusion the default is “attention over the 77 prompt tokens”; it can be modified in many ways, but by default it does nothing about where in the image to focus attention.)

- Even though you’re interpolating between cat and kitten, both are nouns, so the syntax shouldn’t change much, and the attention probably hasn’t changed much either.

- B: I think you mean that the process of repeating the denoising step 50 times diverges partway through, and the results change so much from there that the end result appears discontinuous.

- A: Yes. The mapping itself is not linear, so when you stack it over and over again, there will naturally be places where it behaves discontinuously; that’s just how it should be.

- Q: Is that like the butterfly effect (chaos theory)?

- A: Yes, I’m talking about something like the butterfly effect.

- Supplemental note:

- The idea that a tiny difference in initial values can grow into a large difference in the result when it is amplified over and over by an expanding map; the analogy is a butterfly flapping its wings in Tokyo causing a hurricane in the US.

- In this case, to be precise, the initial values are exactly the same and the mapping is very slightly different. I’m just saying error magnification can occur in this case as well, and perhaps that’s what’s happening.

- Even if the prompt’s effect is small, if it changes whether you fall on the right or left side of a mountain, the subsequent denoising carries you further apart!

- Image of a stream hitting a mountain and branching off.

- Q: I see, so the trajectories developed differently and ended up like that.

- A: Yes, it is very possible that the inputs are barely different but the outputs are very far apart, because it is non-linear.
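The “same start, slightly different map” situation can be illustrated with the logistic map, a standard chaotic toy system (not a model of the diffusion process): both runs start from the same value, and the map’s parameter differs by only 1e-8.

```python
def logistic_trajectory(x0, r, steps=100):
    """Iterate x -> r * x * (1 - x); chaotic for r = 3.9."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2, r=3.9)
b = logistic_trajectory(0.2, r=3.9 + 1e-8)  # same start, very slightly different map
gaps = [abs(x - y) for x, y in zip(a, b)]
print(gaps[1], max(gaps))  # a tiny gap after one step, an order-1 gap later on
```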

- Supplemental note:

- Q: Interesting that you can add and subtract vectors like word2vec.

- A: You never know until you try. I thought it would work, but my impression is that there are more discontinuous jumps than I expected.

- It is technically possible to erase the face with an inpaint mask and have it redrawn with an instruction like “I said cat, but this part should be more like a kitten.”

- But for something this hard to control, I think it’s less burdensome for humans to choose from 100 images generated with random seeds than to try to manipulate such details.

This page is auto-translated from [/nishio/Stable Diffusion勉強会](https://scrapbox.io/nishio/Stable Diffusion勉強会) using DeepL. If something looks interesting but the auto-translated English is not good enough to understand it, feel free to let me know at @nishio_en. I’m very happy to spread my thought to non-Japanese readers.