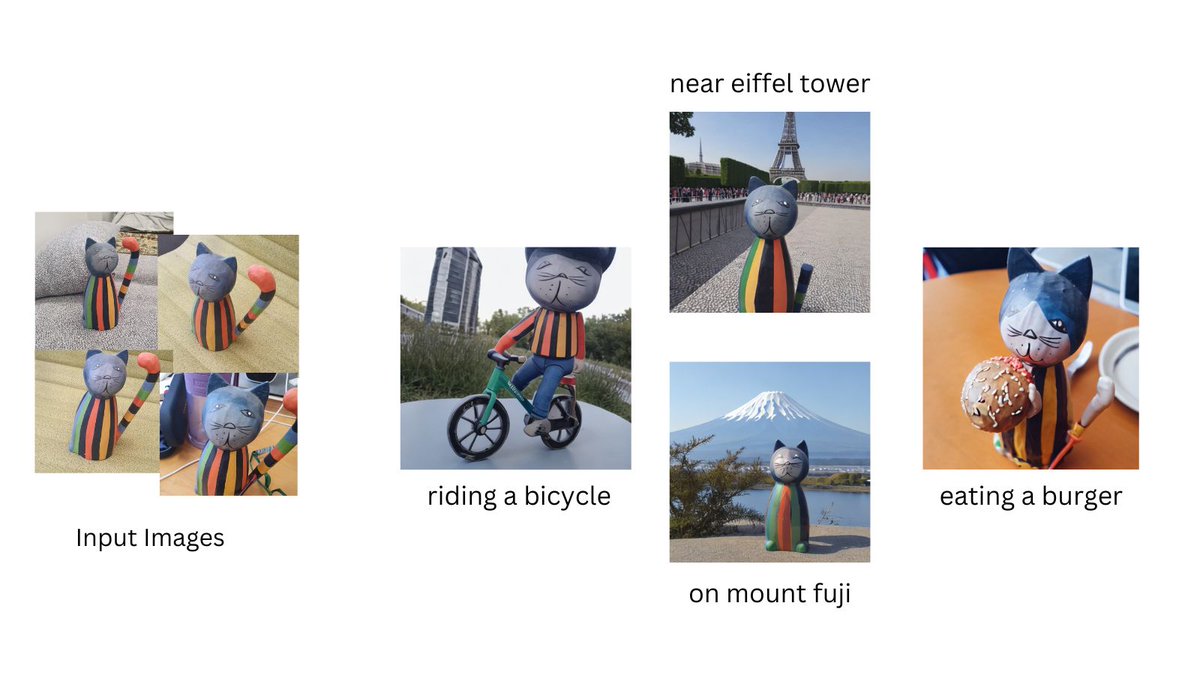

DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation https://arxiv.org/abs/2208.12242

- > [@psuraj28](https://twitter.com/psuraj28/status/1575123562435956740?s=21&t=nsEudpoT1W4dsOjQVkyQyg): dreambooth #stablediffusion training is now available in 🧨diffusers!

- > And guess what! You can run this on a 16GB colab in less than 15 mins!

- >

- 16GB of VRAM, 15 minutes for fine tuning.

- Is the principle different from Textual Inversion and the facial design is maintained?

This page is auto-translated from /nishio/DreamBooth using DeepL. If you looks something interesting but the auto-translated English is not good enough to understand it, feel free to let me know at @nishio_en. I’m very happy to spread my thought to non-Japanese readers.